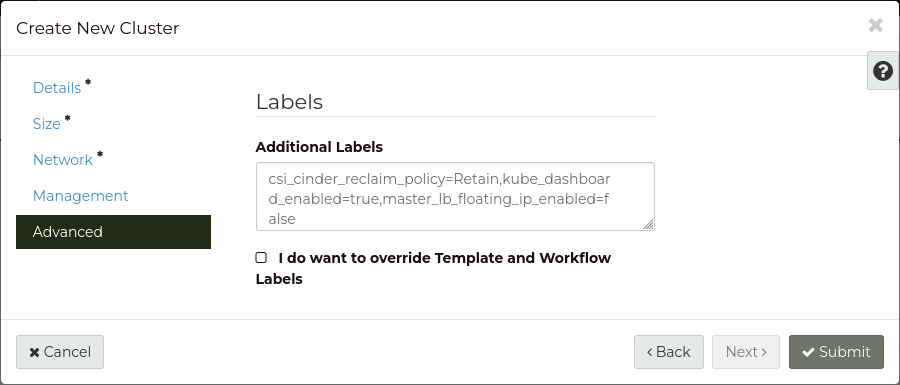

| Label | Type | Default | Accepted values | Description |
|---|---|---|---|---|
| master_lb_floating_ip_enabled | Boolean | false | true or false | Assign a floating IP to the Kubernetes API load balancer so that the Kubernetes API can be accessed from the public Internet. |
| api_master_lb_allowed_cidrs | IPv4/IPv6 CIDR | 0.0.0.0/0 | A CIDR range, e.g. 192.0.2.1/32 | Specify a set of CIDR ranges that are allowed to access the Kubernetes API. Multiple values can be defined (see Specifying multiple label values). |
| extra_network_name | String | null | Network name | Optional additional network to attach to cluster Worker nodes. Useful for giving Worker nodes access to external networks. |
| csi_cinder_reclaim_policy | Enumeration | Retain | Retain or Delete | Policy for reclaiming dynamically created persistent volumes. For more information, see Persistent Volume Retention. |
| csi_cinder_fstype | Enumeration | ext4 | ext4 | Filesystem type for persistent volumes. |
| csi_cinder_allow_volume_expansion | Boolean | true | true or false | Allow volumes to be expanded by editing the corresponding PersistentVolumeClaim object. |
| kube_dashboard_enabled | Boolean | true | true or false | Install the Kubernetes Dashboard into the cluster. |
| boot_volume_size | Integer | 20 | Greater than 0 | Size (in GiB) of the boot volume created for Control Plane and Worker nodes. Currently, this is the only disk attached to nodes. |
| boot_volume_type | Enumeration | b1.sr-r3-nvme-1000 | See Volume Tiers for a list of volume type names | The Block Storage volume type to use for the boot volume. |
| auto_scaling_enabled | Boolean | false | true or false | Enable Worker node auto scaling in the cluster. When set to true, min_node_count and max_node_count must also be set. |
| min_node_count | Integer | null | Greater than 0 | Minimum number of Worker nodes for auto scaling. Required if auto_scaling_enabled is true. |
| max_node_count | Integer | null | Greater than min_node_count | Maximum number of Worker nodes to scale out to when auto scaling is enabled. Required if auto_scaling_enabled is true. |
| auto_healing_enabled | Boolean | true | true or false | Enable auto-healing on Control Plane and Worker nodes. With auto-healing enabled, nodes that remain NotReady for an extended period are replaced. Note: Control Plane machines are only remediated one at a time. Worker nodes are not remediated if 40% of them are considered unhealthy, which prevents some cascading failures. |
| keystone_auth_enabled | Boolean | true | true or false | With this option enabled, a deployment is installed into your cluster that allows Role-Based Access Control to be used with Catalyst Cloud's authentication system. For more information, see Role-Based Access Control. With this option disabled, the admin kubeconfig is still available, as is Kubernetes API Access Control. |
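
The constraints on boot_volume_size, auto_scaling_enabled, min_node_count and max_node_count in the label table can be checked before a cluster is requested. The following is a minimal sketch, not a Catalyst Cloud tool: `validate_node_labels` is a hypothetical helper, and it assumes label values have already been parsed into Python `bool` and `int` values rather than the raw strings a CLI would send.

```python
from typing import Mapping


def _is_int(value: object) -> bool:
    # bool is a subclass of int in Python, so exclude it explicitly.
    return isinstance(value, int) and not isinstance(value, bool)


def validate_node_labels(labels: Mapping[str, object]) -> list[str]:
    """Return human-readable problems with node-related label values.

    Hypothetical helper: only the constraints listed in the label table are
    checked, and values are assumed to be already parsed into bool/int.
    """
    problems: list[str] = []

    # boot_volume_size: Integer, default 20 (GiB), must be greater than 0.
    boot_size = labels.get("boot_volume_size", 20)
    if not _is_int(boot_size) or boot_size <= 0:
        problems.append("boot_volume_size must be an integer greater than 0")

    # auto_scaling_enabled defaults to false; when true, both counts are required.
    if labels.get("auto_scaling_enabled", False) is True:
        min_count = labels.get("min_node_count")
        max_count = labels.get("max_node_count")
        if not _is_int(min_count) or min_count <= 0:
            problems.append("min_node_count must be an integer greater than 0")
        if not _is_int(max_count):
            problems.append("max_node_count must be an integer")
        elif _is_int(min_count) and max_count <= min_count:
            problems.append("max_node_count must be greater than min_node_count")

    return problems


print(validate_node_labels({"auto_scaling_enabled": True, "min_node_count": 3}))
# prints ['max_node_count must be an integer']
```

Returning a list of problems rather than raising on the first one lets every mistake in a label set be reported at once.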
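
The api_master_lb_allowed_cidrs label restricts Kubernetes API access to clients whose address falls inside one of the listed CIDR ranges. The sketch below illustrates only that membership arithmetic using Python's standard `ipaddress` module. `is_api_access_allowed` is a hypothetical name, and the sketch does not model how the load balancer actually enforces the list.

```python
import ipaddress
from typing import Iterable


def is_api_access_allowed(client_ip: str, allowed_cidrs: Iterable[str]) -> bool:
    """Return True if client_ip falls inside any of the allowed CIDR ranges."""
    address = ipaddress.ip_address(client_ip)
    for cidr in allowed_cidrs:
        # strict=False tolerates host bits being set, e.g. "192.0.2.1/24".
        network = ipaddress.ip_network(cidr, strict=False)
        # Membership is always False when the IP versions differ (IPv4 vs IPv6).
        if address in network:
            return True
    return False


# The label's default, 0.0.0.0/0, contains every IPv4 address.
print(is_api_access_allowed("203.0.113.7", ["0.0.0.0/0"]))
# prints True
```

Narrowing the list to a single host such as 192.0.2.1/32 means only that one address matches.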
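
The auto_healing_enabled description says Worker nodes are not remediated if 40% of them are considered unhealthy. The arithmetic of that threshold can be sketched as below. This is an illustration under an assumption, not the platform's implementation: `worker_remediation_allowed` is hypothetical, and it reads the wording as "40% or more", so the exact boundary behaviour on Catalyst Cloud may differ.

```python
def worker_remediation_allowed(unhealthy: int, total: int, max_unhealthy_pct: int = 40) -> bool:
    """Hypothetical check: may unhealthy Worker nodes be replaced?

    Assumes remediation is blocked once the unhealthy share reaches
    max_unhealthy_pct percent of all Worker nodes ("40% or more").
    """
    if total <= 0:
        return False
    # Integer arithmetic avoids floating-point error at the boundary:
    # unhealthy / total < pct / 100  <=>  unhealthy * 100 < pct * total
    return unhealthy * 100 < max_unhealthy_pct * total


# 3 of 10 Worker nodes unhealthy is 30%, below the 40% threshold.
print(worker_remediation_allowed(3, 10))
# prints True
```

Under this reading, a 10-node Worker pool with 4 unhealthy nodes (exactly 40%) would not be remediated, which is the case that guards against replacing a large part of the cluster during a wider outage.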
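
When labels from the table are set on the command line, they are typically written as comma-separated KEY=VALUE pairs, for example with the `--labels` option of `openstack coe cluster create`. The helper below is a hypothetical sketch that renders a Python mapping in that form, assuming the comma-separated KEY=VALUE syntax. It writes booleans as lowercase true/false to match the accepted values in the table, rejects values containing a separator instead of escaping them, and does not handle labels with multiple values (see Specifying multiple label values).

```python
from typing import Mapping, Union

LabelValue = Union[bool, int, str]


def format_labels(labels: Mapping[str, LabelValue]) -> str:
    """Render labels as a comma-separated KEY=VALUE string (hypothetical helper)."""
    parts = []
    for key, value in labels.items():
        # Check bool before anything else: bool is a subclass of int.
        if isinstance(value, bool):
            text = "true" if value else "false"
        else:
            text = str(value)
        # Separators inside a value would corrupt the pair list.
        if "," in text or "=" in text:
            raise ValueError(f"value for {key!r} contains a separator: {text!r}")
        parts.append(f"{key}={text}")
    return ",".join(parts)


print(format_labels({"auto_scaling_enabled": True, "min_node_count": 1, "max_node_count": 5}))
# prints auto_scaling_enabled=true,min_node_count=1,max_node_count=5
```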